Analysis of Human Error in Object Detection Dataset Labels

Abstract

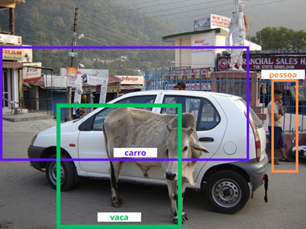

Object detection is an important task in computer vision, applied in areas such as video surveillance, autonomous vehicles, and facial recognition. Advances in convolutional neural networks and deep learning have improved the accuracy of detection models, but the effectiveness of these systems depends on the quality of the labeled datasets they are trained on. Manual image annotation, especially of complex scenes containing multiple objects, is a labor-intensive and error-prone process, and annotation errors can compromise the performance of the trained models. In this study, 30 participants took part in a labeling experiment designed to analyze the influence of image complexity across three difficulty levels. Level 1 contained images with up to 4 objects, Level 2 images with 4 to 10 objects, and Level 3 images with more than 10 objects; the levels also varied in the area occupied by each object and in the classes represented. The results showed that increasing image complexity significantly increased the number of labeling errors, with both the mean and the standard deviation of errors varying across levels. These findings highlight that discrepancies in manual labeling between different annotators can compromise the effectiveness of detection models.
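The three difficulty levels amount to a binning rule on the number of objects per image, and the reported comparison is a per-level summary of error counts. The following is a minimal Python sketch of that bookkeeping, not the authors' method: the function names are hypothetical, the sample records are made-up placeholders rather than data from the study, and it ignores the object-area and class variations the study also considered. Because the stated ranges overlap at 4 objects, the sketch assumes an image with exactly 4 objects belongs to Level 1, and it reports the sample standard deviation.

```python
from statistics import mean, stdev


def difficulty_level(num_objects: int) -> int:
    """Map an image's object count to a difficulty level (hypothetical helper).

    Assumption: Level 1 is "up to 4" and Level 2 is "4 to 10", so 4 is
    ambiguous; this sketch assigns exactly 4 objects to Level 1.
    """
    if num_objects < 0:
        raise ValueError("object count cannot be negative")
    if num_objects <= 4:
        return 1
    if num_objects <= 10:
        return 2
    return 3


def summarize_errors(records):
    """Group (num_objects, error_count) pairs by level.

    Returns {level: (mean_errors, sample_std_errors)}, sorted by level.
    """
    by_level = {}
    for num_objects, errors in records:
        by_level.setdefault(difficulty_level(num_objects), []).append(errors)
    summary = {}
    for level, counts in sorted(by_level.items()):
        # The sample standard deviation needs at least two observations.
        sd = stdev(counts) if len(counts) > 1 else 0.0
        summary[level] = (mean(counts), sd)
    return summary


if __name__ == "__main__":
    # Made-up (object count, error count) pairs, for illustration only.
    hypothetical = [(3, 1), (4, 2), (7, 3), (12, 6), (15, 8)]
    for level, (avg, sd) in summarize_errors(hypothetical).items():
        print(f"Level {level}: mean={avg:.2f}, sd={sd:.2f}")
```

Keeping the level rule in its own function makes the boundary assumption explicit and easy to change if the intended split at 4 objects differs.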

How to Cite

dos Santos, D., Silva, A., de Souza Silva, L., Maciel de Sousa, R., Freire, A., & Torres Fernandes, B. (2025). Analysis of Human Error in Object Detection Dataset Labels. Journal of Engineering and Applied Research, 10(3), 43-54. https://doi.org/10.25286/repa.v10i3.3122

Section

Computer Engineering (Engenharia da Computação)

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.